When you’re driving in a new area and get lost, what do you normally do to get back on the right path? Today, most drivers would take out their phones and use Apple Maps, Google Maps, or Waze. But before these navigation apps were around, people paid close attention to their surroundings and made mental notes of physical landmarks to make sense of where they were. This is similar to how Simultaneous Localization and Mapping (SLAM) works for robots and other autonomous systems. As the name suggests, SLAM is a method that maps a new environment and localizes a robot within that map at the same time. SLAM software uses signs and markers (landmarks) on a Three-Dimensional (3D) map as reference points.

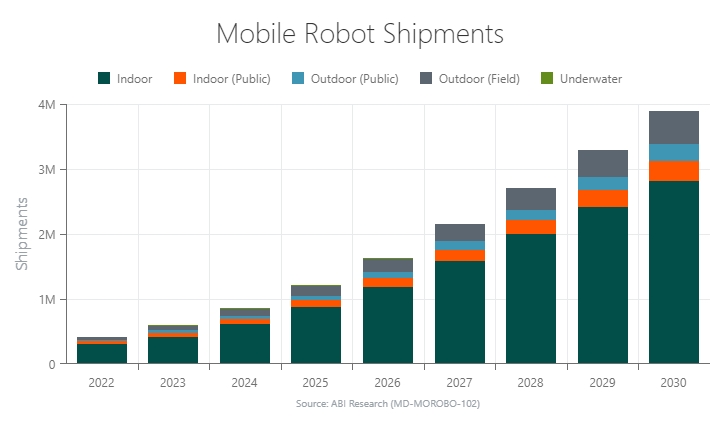

SLAM uses sensors, cameras, and algorithms to chart an optimal navigation path. As shipments of mobile robots, drones, and self-driving cars increase year after year, SLAM techniques must advance further to accommodate novel applications.

What Is SLAM?

Simultaneous localization and mapping refers to the algorithms and techniques that enable robots, drones, and other autonomous vehicles to map an unknown area and localize themselves within that map at the same time. In other words, the robot or autonomous vehicle knows its location within an unfamiliar environment via markers and signs. As a result, the robot/vehicle always understands where it is and the safe path to its destination, allowing it to efficiently perform its task (e.g., transporting assets, drone delivery landing, etc.). Building a digital map and contextualizing the autonomous vehicle’s location on the 3D map empowers you to optimize navigation routes and avoid potentially dangerous situations.

How Does SLAM Work?

There are generally three hardware components a scanning device requires to perform SLAM:

- Sensor Probe: The machine requires a method to interact with its surroundings and capture features, which could involve using cameras, Light Detection and Ranging (LiDAR), radar, sonar, or Radio Frequency (RF)-based or sensor-fusion technologies.

- Motion Reference: To accurately position newly mapped features, the machine must perceive its own movement relative to the environment. This is primarily achieved using an Inertial Measurement Unit (IMU), but many solutions incorporate Global Positioning System (GPS) localization, Real-Time Kinematic (RTK) positioning, or known real-world reference points (commonly visible QR codes or RF tags placed in pre-mapped locations).

- Memory: The device needs to store previously mapped features, ensuring the map can be progressively developed.

The entire SLAM process is governed by statistics. The sensor probe believes a doorframe and window are present 10 meters away; the GPS and IMU systems believe the robot has traveled 5 meters from the last doorframe; and the map in memory, constantly updated on receipt of new data, believes it has seen that doorframe and window before.

All measurements have an associated probability that takes into account sensor noise and drift over time. If the sensor knows with a high degree of reliability that the doorframe and window are where it thinks they are and the map-in-memory is confident that it has seen this combination before, the SLAM algorithm will perform a loop closure. This is where the entire map-in-memory is adjusted to accommodate new (trustworthy) information and remove any positional drift or errors that have accumulated over time.
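The loop closure idea above can be pictured with a toy sketch. It assumes the robot has re-observed a landmark that pins down where its latest pose should be, and that drift built up evenly along the route, so the end-of-loop error is spread linearly back over the trajectory. The function name `close_loop` and the sample poses are hypothetical; production SLAM systems typically solve this with pose-graph optimization rather than a linear spread.

```python
def close_loop(poses, true_end):
    """Spread the end-of-loop error linearly back along the trajectory.

    poses: list of (x, y) dead-reckoned estimates; poses[0] is trusted.
    true_end: (x, y) the last pose should have, known from re-observing
              a previously mapped landmark (an assumption of this sketch).
    """
    n = len(poses) - 1
    if n < 1:
        return list(poses)
    err_x = poses[-1][0] - true_end[0]
    err_y = poses[-1][1] - true_end[1]
    corrected = []
    for i, (x, y) in enumerate(poses):
        w = i / n  # 0 at the start (no correction), 1 at the end (full correction)
        corrected.append((x - w * err_x, y - w * err_y))
    return corrected


# A square loop where drift leaves the estimate 0.4 m away from the start.
drifted = [(0.0, 0.0), (5.0, 0.0), (5.1, 5.0), (0.2, 5.1), (0.4, 0.0)]
fixed = close_loop(drifted, true_end=(0.0, 0.0))
```

After the correction, the first pose is unchanged, the last pose sits back on the starting point, and the intermediate poses are nudged in proportion to how far along the loop they were recorded.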

Sensor fusion and filters are used on incoming data from sensor probes and references to improve the accuracy and reliability of measurements.
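As a rough illustration of how fusion improves reliability, the sketch below combines two independent estimates of the same position, each with its own variance, using inverse-variance weighting (the scalar form of a Kalman filter measurement update). The function name `fuse` and the example numbers are assumptions made for illustration, not values from any particular SLAM product.

```python
def fuse(mean_a, var_a, mean_b, var_b):
    """Fuse two independent Gaussian estimates of the same quantity.

    Each estimate is weighted by the inverse of its variance, so the
    more trustworthy sensor pulls the result closer to its reading.
    """
    w_a = 1.0 / var_a
    w_b = 1.0 / var_b
    fused_var = 1.0 / (w_a + w_b)
    fused_mean = fused_var * (w_a * mean_a + w_b * mean_b)
    return fused_mean, fused_var


# Hypothetical readings: odometry says 5.0 m (noisy), a landmark-based
# range measurement says 5.4 m (more precise).
position, variance = fuse(5.0, 0.25, 5.4, 0.04)
```

The fused position lands between the two readings but closer to the more precise one, and the fused variance is smaller than either input, which is why combining sensors tends to beat relying on any single one.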

SLAM also includes a software solution where the measurement/geometric data are extracted and interpreted. Further, mapping software searches for features like edges and vertices, which are used to formulate reference points within the digital map. Several software providers leverage Artificial Intelligence (AI) and Machine Vision (MV) to identify objects and structures in the environment.

The fallibility and uncertainty surrounding SLAM solutions give rise to a host of competing algorithms and techniques. Each solution strives to produce the most accurate map faster and at a higher resolution.

Two common SLAM types include LiDAR SLAM and visual SLAM (vSLAM).

Types of SLAM

The most common range measurement devices (sensors) for SLAM are LiDAR sensors or cameras. While other sensors exist, such as radar and sonar, LiDAR SLAM and visual SLAM (vSLAM), which relies on cameras, are the most popular forms. Many approaches also fuse data from different sensors.

LiDAR SLAM

3D LiDAR systems can send hundreds of thousands of laser pulses into a small region per second and measure the time it takes for the light to return. This SLAM technique provides distance measurement to a surface point. When combined with an Inertial Measurement Unit (IMU) for movement reference, LiDAR can produce an accurate and dense point cloud. Point clouds are then parsed to identify features (reference points) that are stored in memory.
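The time-of-flight measurement described above reduces to simple arithmetic: the pulse travels to the surface and back, so the range is half the round-trip time multiplied by the speed of light. The sketch below also converts one return into a 3D point in the sensor's frame. The function names and the axis convention (x forward, z up) are assumptions for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second, in vacuum


def tof_to_range(round_trip_seconds):
    """Distance to the surface: the pulse covers the range twice."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def to_point(range_m, azimuth_rad, elevation_rad):
    """Convert one return (range plus beam angles) to sensor-frame x, y, z."""
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


# A pulse that returns after roughly 66.7 nanoseconds hit something about 10 m away.
distance = tof_to_range(66.7e-9)
point = to_point(distance, math.radians(30.0), 0.0)
```

Repeating this conversion for every pulse in a sweep is what builds up the point cloud.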

Although Elon Musk and others are not fans, LiDAR SLAM is commonly used by autonomous vehicle companies like Waymo. The key advantage of LiDAR is its ability to provide ultra-accurate measurements, which are essential for driver safety. While LiDAR can penetrate foliage, which is great for surveying and other outdoor applications, it does not perform very well in wet conditions (rain, fog, snow).

LiDAR is still relatively expensive, which drives up costs for SLAM hardware. In fact, this is one of the key reasons why ABI Research expects significant growth for the more affordable vSLAM algorithms. However, as LiDAR prices decrease, ABI Research expects these sensors to become more readily available across industries.

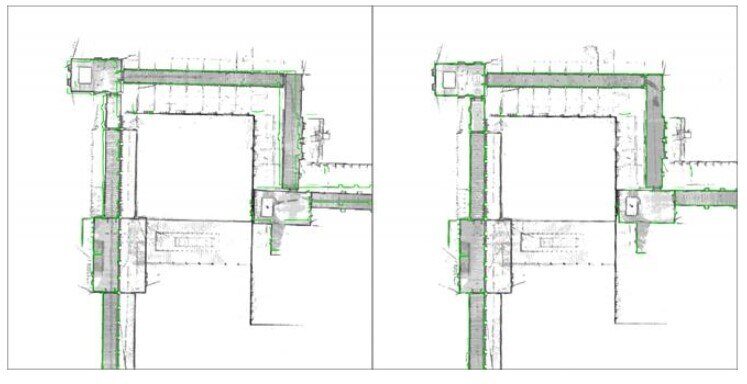

Figure 1 highlights the value of NavVis’ precision SLAM technology. The green lines represent the true locations of the walls. On the left, it is possible to see that small drifts in angle can generate large errors in long hallways. This has been corrected in the right panel using NavVis’ improved algorithm.

Figure 1: NavVis’ Precision SLAM Technology
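To see why a small angular drift matters so much in a long hallway, consider a simplified geometric sketch. It assumes a constant heading error over a straight corridor, so the far end of the mapped wall is displaced sideways by the hallway length times the sine of the error. The function name `lateral_error` is hypothetical, and the sketch does not represent NavVis’ algorithm.

```python
import math


def lateral_error(hallway_length_m, heading_error_deg):
    """Sideways displacement at the far end of a straight hallway
    when the heading estimate is off by a constant angle."""
    return hallway_length_m * math.sin(math.radians(heading_error_deg))


# A heading error of just 1 degree over a 50 m hallway.
offset = lateral_error(50.0, 1.0)
print(f"{offset:.2f} m")  # roughly 0.87 m
```

Under these assumptions, a one-degree error is enough to misplace the end of a 50-meter wall by almost a meter, which is the kind of bend visible in an uncorrected scan.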

Visual SLAM

Visual SLAM uses monocular (one) or stereo (two) cameras to perform localization and mapping. The vSLAM scanning device identifies environmental features like edges and vertices and then makes point references within the 3D model of the environment. In turn, the simultaneous localization and mapping software can accurately gauge the depth of an object within the 3D image.

Although stereo cameras can return 3D data by design, monocular cameras have to rely on photogrammetry to gain depth information. Photogrammetry for monocular cameras includes the popular structure-from-motion algorithm, which uses differences in successive image frames to simultaneously infer the camera’s motion and the location of objects in three-dimensional space.
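For the stereo case, depth comes from the standard pinhole relationship between disparity (how far a feature shifts between the left and right images) and distance: depth equals focal length times baseline divided by disparity. The sketch below assumes a rectified stereo pair, a focal length expressed in pixels, and hypothetical camera values chosen only for illustration.

```python
def stereo_depth(focal_length_px, baseline_m, disparity_px):
    """Depth of a feature seen by a rectified stereo pair.

    focal_length_px: focal length in pixels
    baseline_m: distance between the two camera centers in metres
    disparity_px: horizontal shift of the feature between the images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera: 700 px focal length, 12 cm baseline.
near = stereo_depth(700.0, 0.12, 42.0)  # large shift, close object
far = stereo_depth(700.0, 0.12, 21.0)   # small shift, distant object
```

Because depth is inversely proportional to disparity, distant objects produce very small shifts, so depth estimates become less precise as range increases.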

Due to its reliance on visual information, vSLAM is a good match for well-lit settings, such as supermarkets, large stores, hotels, and hospitals.

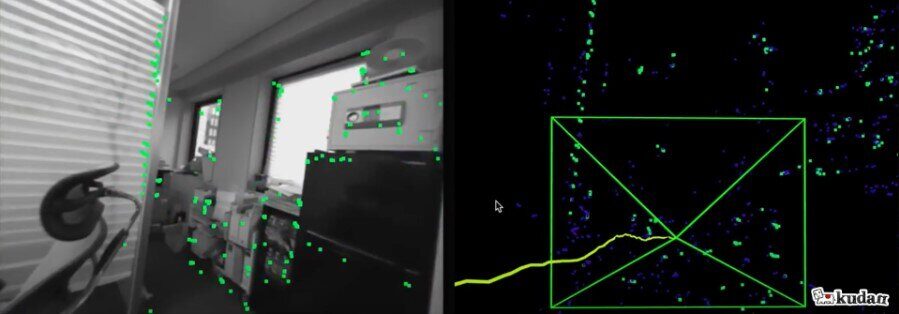

Figure 2: Kudan Visual SLAM Solution

SLAM Applications

Simultaneous localization and mapping is currently used in a wide range of robotic and other autonomous applications. These applications span various industries, such as retail, warehousing, home cleaning, lawn care, drone delivery, and autonomous vehicles.

Here is how SLAM plays an essential role in 7 key applications.

1. Inventory Counting

According to an Auburn University study, inventory accuracy is just 65% in brick-and-mortar stores. Autonomous robots equipped with machine vision cameras can help solve this retail challenge by scanning store shelves more efficiently and reliably than human workers. These robots leverage SLAM to map and localize the supermarket or retail store. Simultaneous localization and mapping ensures that the robot knows its location within the store and can smoothly traverse to an aisle requiring inventory scanning.

Simbe Robotics’ Tally robot is a good example of SLAM in action. Tally uses SLAM to create a digital map of the store and all the products on the shelves. From there, the autonomous mobile robot regularly browses the aisles to track inventory changes over time using a secondary suite of machine vision algorithms. When a shelf is empty, Tally sends an alert to store employees so they can swiftly restock the shelf. Tally also minimizes shrinkage by identifying potential theft within a store because it knows which products left the building without being paid for at checkout.

2. Transport

One of the most common use cases for industrial robots is transporting assets from one area to another. SLAM maps out a facility so the robot or self-driving forklift can navigate without bumping into obstacles like walls and shelves.

A warehouse is an easy example to envision. A robot automates repetitive, physically strenuous tasks like transporting a pallet of boxes to a truck. Without SLAM algorithms, the robot would lack localized contextualization, resulting in inefficient and unsafe navigation.
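Once a map and the robot's position are available, a planner can search the map for a collision-free route. The sketch below is a minimal illustration of that downstream step, not a part of SLAM itself: it runs a breadth-first search over a small occupancy grid, where `#` marks shelving and `.` marks free floor. The grid layout and the function name `shortest_path` are hypothetical.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search over a 4-connected occupancy grid.

    grid: list of strings, '#' is an obstacle, '.' is free floor.
    start, goal: (row, col) tuples. Returns a list of cells, or None
    if the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the predecessors to rebuild the route.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != '#' and (nr, nc) not in came_from):
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


warehouse = [
    "....",
    ".##.",
    ".##.",
    "....",
]
route = shortest_path(warehouse, (0, 0), (3, 3))
```

The returned route skirts the shelving block in the middle of the grid. Real deployments generally use richer maps and planners that account for the robot's size and motion constraints.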

SLAM is often combined with LiDAR sensors in industrial robot applications, but cheaper visual-only SLAM is gaining traction. Another example is healthcare robots that assist hospital staff in medical operations. When patients' lives are possibly on the line, SLAM is vital for the robot to quickly transport necessary tools and other items to the surgery room.

Often, if an Autonomous Mobile Robot (AMR) is operating in a known environment like a warehouse, a highly accurate 3D map is created before the AMR deployment as a reliable reference. RF tags and QR stickers can also act as trustworthy reference points to align a robot’s SLAM map in these environments.

3. Retail Store Cleaning

Cleaning robots are another type of AMR that leverages SLAM. Like inventory scanning robots, cleaning robots must be capable of efficiently traversing a store’s aisles. For example, more than 6,5000 of Brain Corp’s Tennant cleaning robots are in operation. The company’s robots avoid obstacles and optimize cleaning paths with SLAM, LiDAR sensors, and advanced Artificial Intelligence (AI)-based cameras.

Localizing the robot’s whereabouts within a store map ensures that cleaning robots do not clean the same spot on the floor twice. On the retail side, companies like Walmart and Ahold Delhaize have shown interest in “robot janitors.”

Figure 3: Brain Corp Autonomous Scrubber Robot

4. Household Robots

SLAM is primarily used in household applications for floor care devices and lawn mowers. These robotic machines use sensors like lasers and cameras to build an initial map of the environment and construct a floor plan for optimized navigation routes.

Before LiDAR sensors became relatively affordable, autonomous floor care robots performed their internal mapping by moving randomly about the floor and identifying obstacles only by bumping into them. Needless to say, this is not the best way for a robot to map a floor plan and clean it. Today, vSLAM is commonly used by autonomous vacuum cleaners, as these devices are not hungry for processing power.

SLAM is also a core component for autonomously cutting your lawn. In this use case, you have to install a wire along the yard barrier or you can create a geofence using GPS. From there, the lawn mower bot knows the boundaries within which it must cut. ABI Research believes visual data is a sizable opportunity for robotic lawn mower manufacturers as vSLAM algorithms have evolved. SLAM systems will combine visual sensor data with GPS and RTK base station data for a robust outdoor solution.
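A geofence check can be sketched as a point-in-polygon test. The example below uses the classic ray-casting method and assumes the yard boundary and the mower's position have already been converted to local x, y coordinates in meters; the function name `inside_geofence` and the yard shape are hypothetical, and a real system would also need to handle GPS error near the boundary.

```python
def inside_geofence(point, boundary):
    """Ray-casting test: count how many polygon edges a horizontal ray
    from the point crosses. An odd count means the point is inside.

    point: (x, y) in local metres
    boundary: list of (x, y) polygon vertices in order
    """
    x, y = point
    inside = False
    n = len(boundary)
    for i in range(n):
        x1, y1 = boundary[i]
        x2, y2 = boundary[(i + 1) % n]
        # Only edges that straddle the ray's height can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


# A hypothetical 20 m by 10 m rectangular yard.
yard = [(0.0, 0.0), (20.0, 0.0), (20.0, 10.0), (0.0, 10.0)]
mower_ok = inside_geofence((5.0, 5.0), yard)
mower_escaped = inside_geofence((25.0, 5.0), yard)
```

The same test works for irregular yards, since it makes no assumption that the polygon is rectangular or convex.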

5. Drones

SLAM is a key facilitator for drone use cases. While commercial delivery drones can use the Global Navigation Satellite System (GNSS) to fly from a distribution center to the customer’s address, GNSS cannot provide the centimeter-level location accuracy required for landing in a safe spot. Moreover, LiDAR scanners enable a drone to quickly maneuver if the SLAM solution recognizes a landmark or if an object is in the way.

Beyond commercial delivery, simultaneous localization and mapping enables drone operators to perform search and rescue in disaster zones, survey a canyon, surveil an underground mine, etc.

6. Real Estate

Surveying real estate properties can often be laborious and time-consuming, especially for large building developments. Therefore, anything that can streamline the surveying process will be a welcome addition to the real estate industry. LiDAR SLAM can ease the surveying process by providing accurate information about a building very quickly.

For example, a single LIFA employee surveyed a 13-story residential building in Vejle, Denmark, using the handheld GeoSLAM ZEB-REVO LiDAR laser scanner. The survey only took an hour and a half to complete—one hour designing walking routes and half an hour performing the scanning. Without using SLAM algorithms, the surveying process in this example would have taken 1 to 2 days and required several people.

LiDAR’s ability to create high-resolution and accurate 3D models of environments is also beneficial for architects, site managers, and construction companies. They can use SLAM-based scans to optimally design a building or make renovations. The only drawback of SLAM in real estate is that some applications require millimeter-level accuracy, whereas SLAM-based scanning only achieves centimeter-level accuracy.

7. Autonomous Vehicles

To operate safely, a self-driving car must be able to understand its geolocation and immediate surroundings. It must also map the dynamic aspects of the environment, such as people, animals, objects on the road, diversions, parking spaces, etc.

Although high-precision GNSS will play a partial role in autonomous vehicles, perception must still exist in areas lacking GNSS support. Therefore, SLAM is a prerequisite for autonomous vehicles (SAE Level 3 and above). SLAM algorithms establish a relationship between the vehicle and the objects around it for safe, efficient navigation.

SLAM Innovation

The shift from Automated Guided Vehicles (AGVs) to Autonomous Mobile Robots (AMRs) is driven by the flexibility and programmability of SLAM navigation. Unlike AGVs, which rely on magnetic tracks for navigation and 2D LiDAR for safety, AMRs using SLAM can adapt to dynamic environments without dedicated infrastructure. This deployment simplicity makes an autonomous mobile robot a more attractive option to end users.

Visual SLAM is emerging as a cost-effective alternative to traditional LiDAR SLAM. The technology utilizes cameras and proprietary software to address challenges like motion blur and varying lighting conditions. A vSLAM approach not only reduces computational costs and Bill of Materials (BOM), but also enhances data capture for machine vision applications. Consequently, the robotics market is seeing increased enterprise adoption of MV SLAM methodologies, and new robot products offer solutions for inventory management, object tracking, automated inspection, and mapping.

My team and I observe forward-thinking Software-as-a-Service (SaaS) companies like Slamcore, RGo Robotics, and Artisense offering high-resolution vSLAM sensor fusion for robots and drones. Sensor fusion combines cameras with LiDAR to create a robust, accurate, and reliable SLAM solution. While sensor fusion is not as cheap as a visual-only approach, it does eradicate the shortcomings you will experience using only cameras or LiDAR.

To learn more about how simultaneous localization and mapping and other perception techniques are shaping the future of robotics, download ABI Research’s Robotics Software: Mobile Robot Perception for Enterprise research report.