Enterprise data fabric is gaining significant attention as enterprises look for a holistic approach to data storage and integration. As enterprises introduce more technologies into their operations, the volume of data they generate will continue to grow. That growth makes collating, storing, and contextualizing data more challenging. An enterprise data fabric unifies data across departments and business units, enabling users to draw connections that would otherwise go unnoticed.

But a key consideration is where your enterprise data fabric should be deployed: in the cloud or on-premises? Each option has advantages and disadvantages. An on-premises data fabric gives enterprises more control and stronger security, but it comes with high costs and limited scalability. Conversely, a cloud-based data fabric scales easily and offers affordable pay-as-you-go pricing, but it carries greater security risk and offers less control.

For these reasons, a hybrid cloud approach to enterprise data fabric has emerged. A data fabric that supports both on-premises and cloud deployments offers flexibility, particularly for enterprises that need to balance cloud scalability with on-premises control.

ABI Research takes a two-pronged approach to evaluating the hybrid cloud market. Our Hybrid Cloud & 5G Markets Research Service identifies enterprises’ specific requirements for data storage, management, and integration, while our Next-Gen Hybrid Cloud Solutions Research Service assesses the forward-looking technologies facilitating data integration and the vendors offering these solutions.

What Is an Enterprise Data Fabric?

An enterprise data fabric is an integration architecture that unifies data collected from various business units. Organizations have historically struggled to make data accessible to all stakeholders, which makes it harder to draw meaningful insight from data lakes (which have often degenerated into data swamps), data warehouses, and other databases. A data fabric solves this issue by creating a centralized platform through which users across departments can access essential data. Whether data originates in public or private clouds, on-premises, or at the edge, a data fabric aggregates it.

Crucially, a data fabric eliminates the data silos that typically hinder an enterprise’s decision-making. In turn, enterprises can extract more value from their data because each business unit gains visibility into data held by the others. As organizations continue to digitally transform and generate greater quantities of data, a data fabric becomes all the more critical. Without one, organizations will miss key trends and patterns that inform effective business strategies.

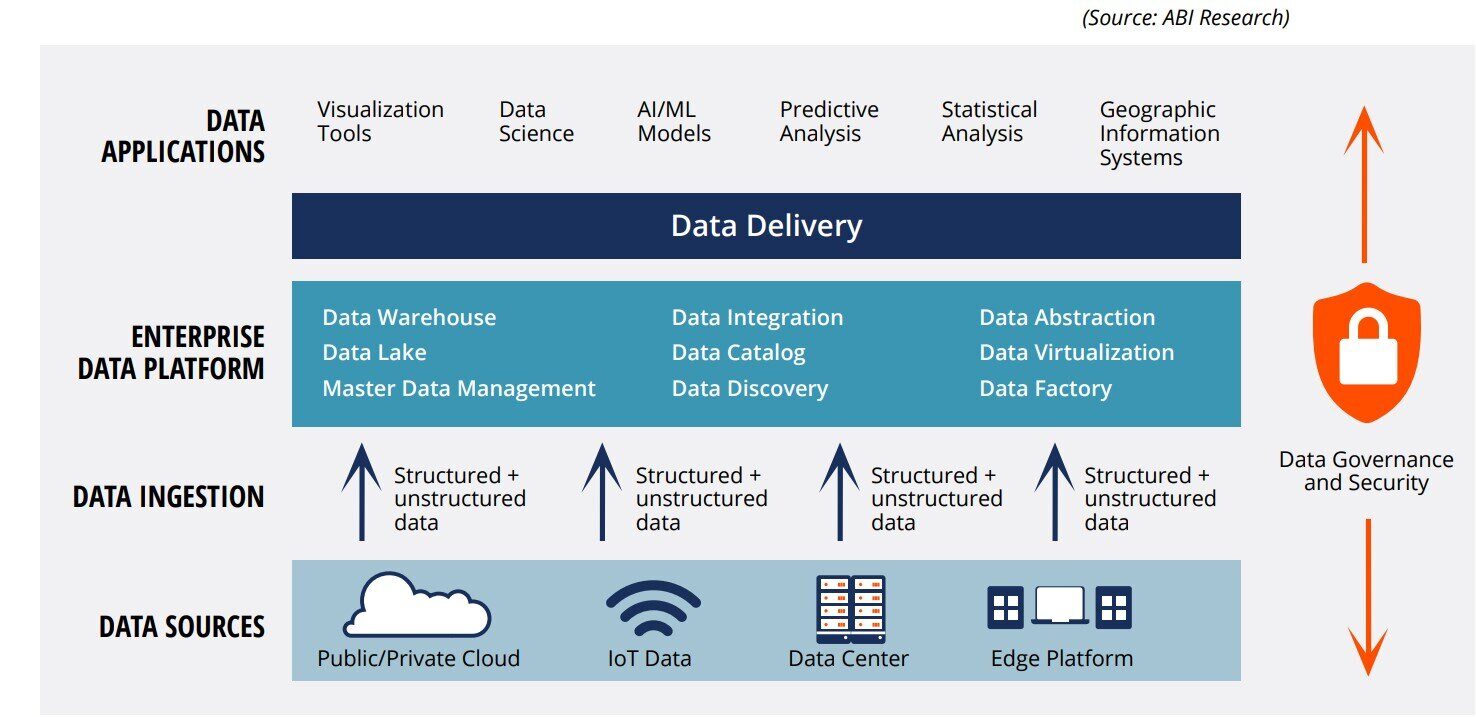

An enterprise data fabric architecture has four main parts:

- Data Source: Where the data comes from, like cloud storage, on-site data centers, edge devices, and Internet of Things (IoT) devices.

- Data Ingestion: This layer collects data from different sources, processes it, and gets it ready for use.

- Data Platform: This is the system that manages the data, including tools for storing, integrating, organizing, and processing the data to extract useful insights.

- Data Application: Here, businesses use tools to visualize and analyze the data, often with Artificial Intelligence (AI) and Machine Learning (ML), to spot trends and patterns. Microsoft highlighted this kind of AI-driven data analysis at its 2024 Build conference.

Figure 1: Example of Enterprise Data Fabric Framework
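To make the four layers concrete, here is a toy Python sketch of how data might flow from source to application. It is an illustration under stated assumptions, not a reference design: the record shape, the source names, and functions such as `ingest` and `average_metric` are hypothetical and do not correspond to any vendor's API. The point it shows is that the application layer queries a single interface and never needs to know which environment a record came from.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from statistics import mean

# Hypothetical record shape shared by every layer of this sketch.
@dataclass(frozen=True)
class Record:
    source: str   # environment of origin: "cloud", "on_prem", or "edge"
    domain: str   # business unit that produced the record
    metric: str
    value: float

# Layer 1 (Data Source): stand-ins for cloud storage, data centers, and IoT devices.
def read_sources():
    return {
        "cloud":   [{"unit": "sales", "metric": "orders", "value": "120"}],
        "on_prem": [{"unit": "plant", "metric": "defects", "value": "4"}],
        "edge":    [{"unit": "plant", "metric": "defects", "value": "6"},
                    {"unit": "plant", "metric": "defects", "value": "n/a"}],
    }

# Layer 2 (Data Ingestion): normalize raw rows into Records, dropping unparseable ones.
def ingest(raw):
    records = []
    for source, rows in raw.items():
        for row in rows:
            try:
                value = float(row["value"])
            except (KeyError, ValueError):
                continue  # a real pipeline would quarantine and log bad rows
            records.append(Record(source, row["unit"], row["metric"], value))
    return records

# Layer 3 (Data Platform): store records and expose one query interface across sources.
@dataclass
class DataPlatform:
    by_metric: dict = field(default_factory=lambda: defaultdict(list))

    def load(self, records):
        for r in records:
            self.by_metric[r.metric].append(r)

    def query(self, metric):
        return list(self.by_metric.get(metric, []))

# Layer 4 (Data Application): analyze the unified view regardless of origin.
def average_metric(platform, metric):
    values = [r.value for r in platform.query(metric)]
    return mean(values) if values else None

platform = DataPlatform()
platform.load(ingest(read_sources()))
print(average_metric(platform, "defects"))  # combines on-prem and edge rows
```

Here the defect average blends on-premises and edge readings that would otherwise sit in separate silos; the malformed edge row is filtered out at ingestion rather than reaching the analysis.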


Building An Enterprise Data Fabric in the Hybrid Cloud

Data integration solutions will be most effective when built with a hybrid cloud approach. Hybrid cloud computing unifies enterprise data through a combination of public/private cloud platforms, edge deployments, and on-premises infrastructure.

The hybrid cloud approach to enterprise data fabric enables organizations to benefit from the scalability and advanced tools that cloud computing offers. At the same time, edge computing moves processing closer to where data is generated, onto edge servers, devices, and sensors. This improves efficiency for data-intensive, latency-sensitive applications such as Automated Guided Vehicles (AGVs).

A hybrid cloud model gives enterprises the flexibility to scale their data infrastructure according to budget constraints and computing requirements, while retaining the option to run applications on-premises when needed. Managing a hybrid cloud requires edge-to-cloud orchestration, in which computing power and data storage are distributed between cloud platforms and local edge networks according to business priorities. This allows organizations to combine the scalability of cloud environments with the real-time responsiveness of edge devices.

For example, critical data such as equipment maintenance logs might be stored on-premises or processed at the edge, while non-sensitive files are moved to public cloud storage for cost efficiency. This balanced approach provides greater control over data management while supporting both security and agility. Public cloud infrastructure is also often used for tasks that require extensive computational resources, such as training Large Language Models (LLMs). Amazon Web Services’ (AWS) Industrial Data Fabric solution illustrates this approach.
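The placement logic in this example can be sketched as a simple rule. This is a hypothetical policy written for illustration only: the `Dataset` attributes, the `Tier` names, and the order in which rules are checked are assumptions, not a feature of any orchestration product. Control is checked first, then compute demand, then latency, with cheap public cloud storage as the default.

```python
from dataclasses import dataclass
from enum import Enum

class Tier(Enum):
    EDGE = "edge"
    ON_PREM = "on_prem"
    PUBLIC_CLOUD = "public_cloud"

# Hypothetical dataset descriptor; real orchestration tools use richer metadata.
@dataclass(frozen=True)
class Dataset:
    name: str
    sensitive: bool        # e.g. equipment maintenance logs
    needs_real_time: bool  # e.g. AGV telemetry
    compute_heavy: bool    # e.g. an LLM training corpus

def place(ds: Dataset) -> Tier:
    """Assumed rule order: control first, then compute demand, then latency, then cost."""
    if ds.sensitive:
        # Sensitive data stays under local control; real-time work runs at the edge.
        return Tier.EDGE if ds.needs_real_time else Tier.ON_PREM
    if ds.compute_heavy:
        # Elastic public cloud capacity suits jobs such as training LLMs.
        return Tier.PUBLIC_CLOUD
    if ds.needs_real_time:
        # Latency-critical, non-sensitive data is still processed near its source.
        return Tier.EDGE
    # Everything else goes to low-cost public cloud storage.
    return Tier.PUBLIC_CLOUD

catalog = [
    Dataset("maintenance_logs", sensitive=True, needs_real_time=False, compute_heavy=False),
    Dataset("agv_telemetry", sensitive=False, needs_real_time=True, compute_heavy=False),
    Dataset("marketing_assets", sensitive=False, needs_real_time=False, compute_heavy=False),
    Dataset("llm_training_corpus", sensitive=False, needs_real_time=False, compute_heavy=True),
]

for ds in catalog:
    print(f"{ds.name:20} -> {place(ds).value}")
```

Putting sensitivity first means a dataset never leaves local infrastructure just because it is expensive to process; an enterprise with a private cloud might add a fourth tier for that case.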

Enterprise Data Fabric Vendors

Some of the leading data fabric vendors targeting enterprises are listed below.

- Hewlett Packard Enterprise (HPE) Ezmeral Data Fabric provides unified data visibility and management across cloud, on-premises, and edge environments. This combination eliminates silos and addresses performance, compliance, and cost concerns.

- IBM Cloud Pak for Data is a modular suite that combines data governance, security, integration, and analytics tools, including Db2 and watsonx.data, and is designed to support workloads such as Retrieval-Augmented Generation (RAG) applications.

- Hitachi Vantara’s DataOps platform offers a single platform for integrating, governing, and managing data, along with the Pentaho app for accessing and processing data from major cloud providers.

- Informatica’s Intelligent Data Management Cloud (IDMC) integrates data cataloging, data quality, and master data management with AI-powered automation, using its CLAIRE AI engine to boost data discovery and productivity.

- TIBCO Platform delivers real-time data management with applications for data integration, Application Programming Interface (API) connectivity, and event processing. It features over 200 pre-built connectors for seamless interaction with platforms like Microsoft, Oracle, Salesforce, and SAP.

Best Practices for Enterprise Data Fabric Platform Providers

Data quality, management, and sovereignty are essential for digital transformation. For example, enterprise Generative Artificial Intelligence (Gen AI) use cases rely on accurate and timely data integration to train LLMs. If an LLM lacks access to critical data, its output will be sub-optimal. An enterprise data fabric collects data flexibly and securely from the sources you need.

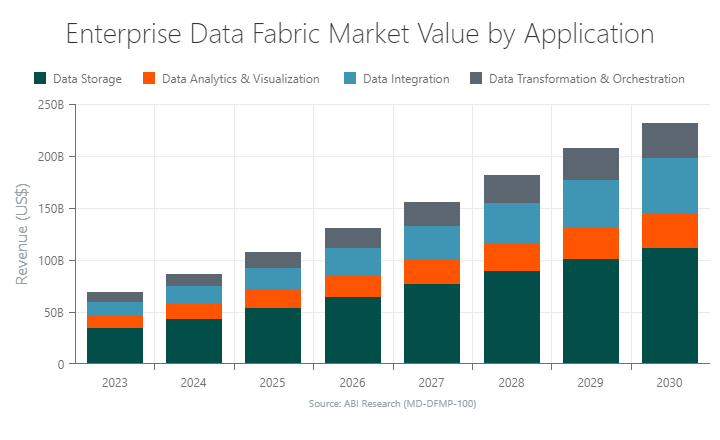

ABI Research forecasts that enterprise data fabric will be a US$232 billion opportunity by 2030, a three-fold increase over 2023. Data storage applications will drive the most revenue for data fabric solutions, accounting for nearly half of the market.
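As a rough check on what this forecast implies, the arithmetic below assumes that "three-fold" means the 2030 figure is three times the 2023 figure. That reading is an interpretation, and the derived numbers are back-of-the-envelope values, not figures published in the forecast. Here $M_{2030} = 232$ is the forecast market size in US$ billion, $M_{2023}$ is the implied 2023 size, and the growth rate is compounded over the seven years from 2023 to 2030.

```latex
\begin{aligned}
M_{2023} &\approx \frac{M_{2030}}{3} = \frac{232}{3} \approx 77.3 \ \text{(US\$ billion)} \\
\mathrm{CAGR}_{2023\to 2030} &= \left(\frac{M_{2030}}{M_{2023}}\right)^{1/7} - 1 = 3^{1/7} - 1 \approx 0.170
\end{aligned}
```

Under that assumption, the market would need to grow by roughly 17% per year.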

In building and marketing an enterprise data fabric offering, solution providers should take the following four steps:

1.) Enhance data integration and analytics capabilities to expand the number of use cases a data fabric can address.

2.) Target specific use cases such as productivity and quality control to improve the value proposition of your solution.

3.) Focus on multi-/hybrid cloud support because enterprises often use multiple cloud service providers and store/manage data in different environments.

4.) Align your platform with business goals such as improving customer service, strengthening sales outreach, and accelerating product development.

Download ABI Research’s Integrating Enterprise Data With A Hybrid Cloud Approach whitepaper to better assess the capabilities, enabling technologies, and solution providers of data fabrics.

About the Authors

Yih-Khai Wong, Principal Analyst


Yih-Khai Wong is a Principal Analyst in the Strategic Technologies team. He is responsible for the distributed & edge computing research service, covering the evolution of processing platforms that handle various IT and OT workloads across public, private, edge, and on-premises cloud environments.

Leo Gergs, Principal Analyst


Leo Gergs leads the research in 5G Markets, including 5G monetization models. While this also includes success stories in the consumer domain, his research focuses on cellular revenue opportunities for enterprise connectivity. Within this context, he leads ABI Research’s work on private cellular networks and the role of hyperscalers, infrastructure vendors, Communication Service Providers (CSPs), and System Integrators (SIs) for enterprise connectivity.